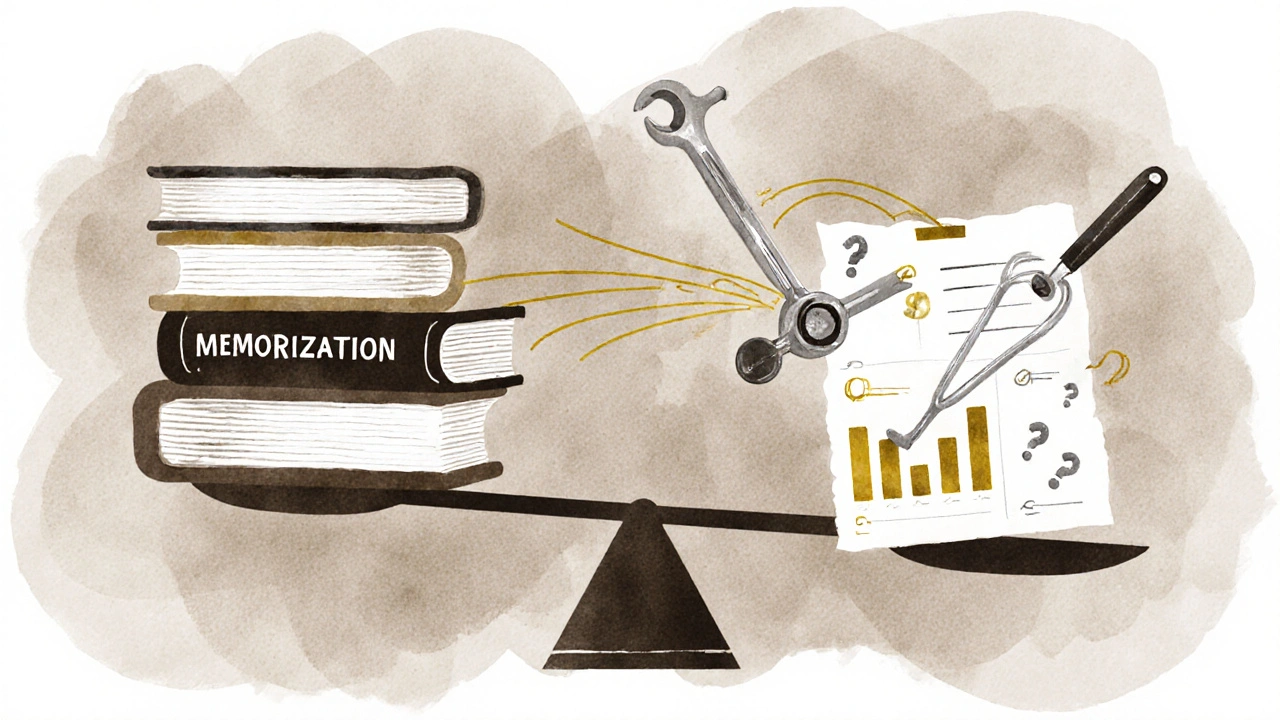

Getting certified shouldn’t feel like a lottery. If your certification exam is poorly designed, even the most skilled professional might fail - while someone who memorized the right answers passes. That’s not just unfair. It’s dangerous. In fields like healthcare, engineering, or finance, a certification isn’t a badge. It’s a promise that the person holding it can do the job safely and correctly. So how do you design an assessment that actually measures what it claims to measure? The answer lies in two non-negotiable principles: validity and reliability.

What Validity Really Means in Certification Exams

Validity isn’t about how hard the test is. It’s about whether the test measures the right thing. If you’re certifying a project manager, your exam should test their ability to plan timelines, manage risks, and lead teams - not their knowledge of 19th-century economic theory. Too many certifications drift into this trap. They confuse content coverage with competence.

Think of validity as alignment. Every question on your exam should connect directly to a core task or skill that certified professionals must perform. Assessment specialists call this content validity. It’s not enough to say, ‘We covered all the topics.’ You need to prove that those topics are the ones that matter on the job.

Here’s how to check it: Start with a job task analysis. Talk to actual certified professionals. Ask them: ‘What do you do every day? What trips you up? What mistakes cost money or lives?’ Then map those tasks to exam items. If a question doesn’t trace back to a real-world job function, cut it. A 2023 study by the International Certification Council found that certifications built this way had 42% higher pass-fail accuracy than those based on textbook chapters alone.
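The mapping step can be sketched in a few lines. This is a minimal Python sketch that assumes each item carries a hypothetical `task_id` tag recorded during the job task analysis. The task and item names are invented for illustration. It lists two things: items that don't trace back to an analyzed task (candidates to cut) and tasks that no item covers (gaps in the exam blueprint).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Item:
    item_id: str
    task_id: Optional[str]  # hypothetical tag: the job task this item assesses, if any


def trace_blueprint(items: List[Item], job_tasks: List[str]) -> Tuple[List[str], List[str]]:
    """Return (items with no valid job task, job tasks with no items)."""
    known = set(job_tasks)
    # Items whose task tag is missing or not part of the job task analysis.
    untraced = [it.item_id for it in items if it.task_id not in known]
    # Tasks from the analysis that at least one item covers.
    covered = {it.task_id for it in items if it.task_id in known}
    uncovered = [task for task in job_tasks if task not in covered]
    return untraced, uncovered


# Hypothetical project-management blueprint.
tasks = ["plan_timeline", "manage_risk", "lead_team"]
items = [
    Item("Q1", "plan_timeline"),
    Item("Q2", "manage_risk"),
    Item("Q3", None),            # never mapped to a task
    Item("Q4", "econ_history"),  # 19th-century economic theory: not a job task
]
untraced, uncovered = trace_blueprint(items, tasks)
print("Cut or justify:", untraced)  # ['Q3', 'Q4']
print("No coverage:", uncovered)    # ['lead_team']
```

An untraced item is not automatically wrong, but someone should be able to name the job task it measures before it stays on the exam.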

Reliability: Consistency Over Time and Across Test-Takers

Reliability is about consistency. If someone takes your exam twice under the same conditions, they should get roughly the same score. If they get wildly different results, your test is unreliable - no matter how valid it looks on paper.
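One common way to put a number on this is test-retest reliability: the correlation between candidates' scores on two sittings of the same exam. The sketch below computes a Pearson correlation in plain Python. The score lists are invented. The sketch assumes the same candidates sat both administrations and did no extra studying in between.

```python
import math
from typing import Sequence


def pearson_r(first: Sequence[float], second: Sequence[float]) -> float:
    """Pearson correlation between two score lists for the same candidates."""
    n = len(first)
    if n != len(second) or n < 2:
        raise ValueError("need two equal-length lists with at least two candidates")
    mean1 = sum(first) / n
    mean2 = sum(second) / n
    cov = sum((a - mean1) * (b - mean2) for a, b in zip(first, second))
    var1 = sum((a - mean1) ** 2 for a in first)
    var2 = sum((b - mean2) ** 2 for b in second)
    if var1 == 0 or var2 == 0:
        raise ValueError("scores have no spread, so correlation is undefined")
    return cov / math.sqrt(var1 * var2)


# Hypothetical scores for five candidates who sat the exam twice.
sitting_1 = [62, 71, 80, 55, 90]
sitting_2 = [65, 70, 78, 5888] if False else [65, 70, 78, 58, 88]
print(round(pearson_r(sitting_1, sitting_2), 3))  # 0.996
```

A value close to 1 means candidates kept roughly the same rank order across both sittings. A value well below that means the score depends on the day as much as on the candidate.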

There are three big reasons exams fail reliability:

- Unclear questions - Ambiguous wording makes even smart people guess. If a question can be interpreted two ways, it’s not measuring knowledge. It’s measuring reading comprehension.

- Too few items - A 20-question exam on cybersecurity practices won’t reliably distinguish between someone who knows 70% and someone who knows 85%. More items = more precision.

- Inconsistent scoring - If human graders interpret open-ended answers differently, reliability collapses. That’s why multiple-choice dominates high-stakes exams. It’s not because it’s easier - it’s because it’s repeatable.
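A classical formula sits behind the "more items = more precision" rule. The Spearman-Brown prophecy formula predicts reliability after a test is lengthened by a factor k: new reliability = kρ / (1 + (k - 1)ρ). The sketch assumes the new items are of the same quality as the existing ones (statistically parallel items). Real item banks only approximate this. The starting reliability of 0.70 is an invented example.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after multiplying test length by length_factor.

    Assumes added items behave like the existing ones (parallel items).
    """
    if not 0 < reliability < 1:
        raise ValueError("reliability must be between 0 and 1")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    k = length_factor
    return k * reliability / (1 + (k - 1) * reliability)


# Hypothetical: a 20-item exam with reliability 0.70.
for new_length in (20, 40, 80):
    k = new_length / 20
    print(new_length, round(spearman_brown(0.70, k), 2))
```

Doubling to 40 items lifts the predicted value to about 0.82. Quadrupling to 80 lifts it to about 0.90. Each added item buys a little less than the one before it.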

Use Cronbach’s alpha to measure internal consistency: how consistently the items on your exam measure the same underlying competence. Treat 0.8 as the minimum for professional certifications. Below 0.7, you’re rolling dice. Many organizations skip this step because it sounds technical, but you don’t need a PhD to run it. Most modern testing platforms calculate it automatically.
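Here is what those platforms are computing. Cronbach's alpha is k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. This is a minimal Python sketch. It assumes a score matrix with one row per candidate and one column per item. For right/wrong items, the result matches KR-20. The tiny response matrix is invented.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a candidates x items score matrix."""
    if not scores:
        raise ValueError("no candidates")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and equal-length rows")
    # Variance of each item across candidates.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of candidates' total scores.
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical right/wrong (1/0) responses: 5 candidates x 4 items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(responses), 2))  # 0.8
```

This perfectly ordered toy data lands exactly on 0.8. In practice, a handful of candidates is far too few for a stable estimate.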

Designing Items That Actually Work

Not all questions are created equal. A poorly written multiple-choice question can undermine an entire exam. Here’s what works:

- One correct answer, no tricks - Distractors should be plausible mistakes, not silly red herrings. If someone picks a wrong answer because they misread ‘not’ in the stem, that’s a design flaw, not a test of knowledge.

- Use scenario-based questions - Instead of ‘What is the formula for ROI?’ ask: ‘A client wants to reduce support costs by 30%. Which action would give the biggest return in six months?’ This tests application, not recall.

- Balance difficulty - Your exam should have a spread: easy, medium, hard. If 90% of candidates score above 85%, your exam is too easy. If 70% fail, it’s either unfair or misaligned.
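Classical item difficulty offers a quick way to check that spread. An item's difficulty is the proportion of candidates who answer it correctly, often called the item's p-value. This sketch sorts items into easy, medium, and hard bands and reports the share of candidates scoring above 85%. The band cut-offs of 0.8 and 0.3 and the response data are assumptions for illustration, not fixed standards.

```python
from typing import List, Sequence


def item_difficulty(scores: Sequence[Sequence[int]]) -> List[float]:
    """Proportion of candidates answering each item correctly (1 = correct)."""
    n = len(scores)
    k = len(scores[0])
    return [sum(row[i] for row in scores) / n for i in range(k)]


def difficulty_band(p: float, easy: float = 0.8, hard: float = 0.3) -> str:
    """Hypothetical bands: p >= easy is 'easy', p <= hard is 'hard'."""
    if p >= easy:
        return "easy"
    if p <= hard:
        return "hard"
    return "medium"


def share_above(scores: Sequence[Sequence[int]], cutoff: float = 0.85) -> float:
    """Share of candidates whose percentage score exceeds the cutoff."""
    k = len(scores[0])
    return sum(1 for row in scores if sum(row) / k > cutoff) / len(scores)


# Hypothetical right/wrong (1/0) responses: 5 candidates x 4 items.
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
]
p_values = item_difficulty(responses)
print([difficulty_band(p) for p in p_values])  # ['easy', 'easy', 'medium', 'hard']
print(share_above(responses))                  # 0.2
```

The first item here has p = 1.0: everyone answered it correctly. An item like that tells you nothing about who is ready.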

Real example: A UK-based IT certification updated its exam from 50% recall questions to 70% scenario-based. Within a year, employers reported a 58% drop in onboarding issues. The certification had become a true signal of readiness.

Validation Isn’t a One-Time Task

Validating an exam isn’t something you do once at launch. It’s an ongoing process. Every time you administer the test, collect data. Track which questions are missed most often. Look for patterns. Are candidates from certain regions struggling with the same item? That could mean cultural bias or unclear context.
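Raw miss rates by region can mislead, because one region's candidates may simply be less prepared overall. A standard psychometric check that separates those two effects is a differential item functioning (DIF) analysis. The sketch below uses the Mantel-Haenszel method, which compares groups only among candidates with the same total score.

It assumes records of (region, total score, correct on this item). It reports the result on the ETS delta scale, where values beyond roughly ±1.5 are commonly treated as large DIF. The regions and counts are invented.

```python
import math
from collections import defaultdict
from typing import Iterable, Tuple

Record = Tuple[str, int, int]  # (group, total score used for matching, 1 if item correct else 0)


def mh_odds_ratio(records: Iterable[Record], reference: str, focal: str) -> float:
    """Mantel-Haenszel common odds ratio for one item, stratified by total score."""
    # Per score level: [ref right, ref wrong, focal right, focal wrong]
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for group, total, correct in records:
        if group == reference:
            strata[total][0 if correct else 1] += 1
        elif group == focal:
            strata[total][2 if correct else 3] += 1
    numerator = denominator = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n
    if numerator == 0 or denominator == 0:
        raise ValueError("not enough data in matched score groups")
    return numerator / denominator


def ets_delta(odds_ratio: float) -> float:
    """ETS delta scale; negative values mean the item favours the reference group."""
    return -2.35 * math.log(odds_ratio)


# Hypothetical data for one item, matched on total scores of 5 and 8.
records = (
    [("north", 5, 1)] * 8 + [("north", 5, 0)] * 2
    + [("south", 5, 1)] * 4 + [("south", 5, 0)] * 6
    + [("north", 8, 1)] * 9 + [("north", 8, 0)] * 1
    + [("south", 8, 1)] * 6 + [("south", 8, 0)] * 4
)
ratio = mh_odds_ratio(records, reference="north", focal="south")
print(round(ratio, 2), round(ets_delta(ratio), 2))
```

In this made-up data, south-region candidates with the same total score are much less likely to get the item right (odds ratio about 6, delta about -4.2). That makes the item a candidate for review of its wording or context.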

Use item response theory (IRT) to see how each question performs across ability levels. If a question is too easy for everyone, it’s not helping you differentiate. If it’s too hard, it might be poorly worded. IRT tells you which items are doing the heavy lifting - and which are just taking up space.
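The two-parameter logistic (2PL) model is one common IRT model. In it, each item has a discrimination a and a difficulty b. A candidate of ability θ answers correctly with probability P(θ) = 1 / (1 + exp(-a(θ - b))). The item's information is a²P(θ)(1 - P(θ)), which peaks at θ = b.

The sketch assumes the a and b parameters have already been estimated by calibration software (not shown). It places a hypothetical pass mark at θ = 0, and the three items are invented.

```python
import math
from typing import Dict, Tuple


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL probability that a candidate with ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information of a 2PL item at ability theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


# Hypothetical calibrated items: (discrimination a, difficulty b)
items: Dict[str, Tuple[float, float]] = {
    "well_targeted": (1.5, 0.0),
    "too_easy": (1.0, -2.5),
    "weak_discriminator": (0.3, 0.0),
}
cut_score = 0.0  # hypothetical pass mark on the ability scale
ranked = sorted(items, key=lambda name: item_information(cut_score, *items[name]), reverse=True)
for name in ranked:
    print(name, round(item_information(cut_score, *items[name]), 3))
```

The well-targeted item carries most of the information at the pass mark. The easy item and the weakly discriminating item add little there: they are the items taking up space.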

Review your exam every 18-24 months. Technology changes. Regulations shift. Job roles evolve. Your certification should too. A 2024 survey of 200 certification bodies showed that those who updated their exams annually had 3.2 times higher industry trust than those who waited three years or more.

Common Pitfalls and How to Avoid Them

Even experienced teams mess this up. Here are the top three mistakes:

- Designing for the textbook, not the job - If your exam is based on a single training manual, you’re not measuring competence. You’re measuring who paid attention in class.

- Ignoring fairness - Language, examples, and context must be accessible to all. If your exam uses regional idioms, jargon that appears in training materials but not in the field, or culturally specific references, you’re excluding qualified candidates.

- Skipping pilot testing - Never launch a certification without testing it on a sample group that mirrors your target audience. Pilot data reveals hidden flaws. One certification program discovered that 40% of candidates misinterpreted a key term - not because they were unprepared, but because the term was used differently in practice than in their study materials.

Why This Matters Beyond the Exam Room

When certifications lack validity and reliability, everyone loses.

- Employers hire people who can’t do the job.

- Professionals spend months studying, only to realize the credential doesn’t open doors.

- The whole system loses credibility.

On the flip side, a well-designed certification becomes a trusted signal. It reduces hiring risk. It raises industry standards. It gives professionals a real advantage. In Scotland, the Chartered Institute of Personnel and Development updated its HR certification with a job-task-driven design. Within two years, employers began requiring it as a baseline - not because it was popular, but because they knew it meant something.

Where to Start

If you’re designing or evaluating a certification exam, here’s your action list:

- Conduct a job task analysis with at least 15 current certified professionals.

- Map every exam item to a specific job task.

- Use at least 70% scenario-based questions.

- Aim for a Cronbach’s alpha of 0.8 or higher.

- Pilot test the exam on a representative group before launch.

- Review and update the exam every 18 months.
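The action list translates directly into a checklist that a program team could run before each release. This is a hypothetical sketch: the `ExamPlan` fields are invented names, and the thresholds are simply the numbers from the list above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExamPlan:
    # Hypothetical summary of one exam form; field names are illustrative.
    smes_interviewed: int  # certified professionals in the job task analysis
    total_items: int
    items_mapped_to_tasks: int
    scenario_items: int
    cronbach_alpha: float
    pilot_tested: bool
    months_since_review: int


def audit(plan: ExamPlan) -> List[str]:
    """Return the action-list items this plan does not yet meet."""
    gaps = []
    if plan.smes_interviewed < 15:
        gaps.append("job task analysis used fewer than 15 certified professionals")
    if plan.items_mapped_to_tasks < plan.total_items:
        gaps.append("some items are not mapped to a job task")
    # Integer comparison avoids floating-point noise in the 70% check.
    if plan.scenario_items * 10 < plan.total_items * 7:
        gaps.append("fewer than 70% of items are scenario-based")
    if plan.cronbach_alpha < 0.8:
        gaps.append("Cronbach's alpha is below 0.8")
    if not plan.pilot_tested:
        gaps.append("exam has not been pilot tested")
    if plan.months_since_review > 18:
        gaps.append("last review was more than 18 months ago")
    return gaps


plan = ExamPlan(smes_interviewed=12, total_items=100, items_mapped_to_tasks=100,
                scenario_items=64, cronbach_alpha=0.84, pilot_tested=True,
                months_since_review=20)
for gap in audit(plan):
    print("-", gap)
```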

You don’t need fancy tools. You don’t need a big budget. You just need discipline. And a willingness to ask: Does this question tell me whether someone can do the job - or just whether they read the manual?

What’s the difference between validity and reliability in certification exams?

Validity asks: ‘Does this exam measure the right things?’ For example, a cybersecurity certification should test threat response skills, not memorized acronyms. Reliability asks: ‘Is the exam consistent?’ If someone takes the test twice and gets wildly different scores, it’s unreliable. A test can be reliable without being valid - like a stopped clock, which shows the same time every time you look but is almost never right. But it can’t be valid without being reliable.

Can a multiple-choice exam be valid?

Absolutely - if the questions are well-designed. Many people assume multiple-choice only tests recall. But scenario-based multiple-choice questions can assess decision-making, prioritization, and problem-solving. The key is to move past isolated facts and focus on real-world situations. For example: ‘You notice a pattern of unauthorized access attempts. What’s your first step?’ This tests judgment, not memorization.

How many questions should a professional certification exam have?

There’s no magic number, but most reliable exams have between 80 and 150 questions. Fewer than 60 items make it hard to achieve adequate reliability - especially for complex skills. More than 200 can cause fatigue and drag down candidates’ performance. The goal is enough items to reliably measure the full range of required competencies without overwhelming candidates.
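Rearranging the Spearman-Brown formula gives a rough way to size an exam from pilot data. To move from reliability ρ to a target ρ*, the test needs to be about ρ*(1 - ρ) / (ρ(1 - ρ*)) times as long. This sketch assumes the new items are of the same quality as the piloted ones. The 40-item pilot with an alpha of 0.75 is hypothetical.

```python
import math


def items_needed(current_items: int, current_reliability: float,
                 target_reliability: float) -> int:
    """Estimate exam length needed to reach a target reliability (Spearman-Brown)."""
    r, t = current_reliability, target_reliability
    if not (0 < r < 1 and 0 < t < 1):
        raise ValueError("reliabilities must be between 0 and 1")
    factor = t * (1 - r) / (r * (1 - t))
    # Round away floating-point noise before taking the ceiling.
    return math.ceil(round(current_items * factor, 6))


print(items_needed(40, 0.75, 0.90))  # 120
```

In this example, a 40-item pilot at 0.75 would need roughly 120 comparable items to reach 0.90. That falls inside the 80-to-150 range mentioned above.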

Is it okay to use open-ended questions in certification exams?

Yes, but only if scoring is highly structured. Open-ended questions add depth, but they’re prone to scorer bias. To make them reliable, use detailed rubrics with clear point allocations for each component of the answer. For example: ‘1 point for identifying the correct risk, 1 point for naming the mitigation strategy, 1 point for explaining why it works.’ Without this, reliability drops fast.
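A rubric like the one in the example can be encoded so that every grader awards points the same way. Agreement between two graders can then be checked with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The criterion names and the two graders' scores below are hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence

# Hypothetical rubric mirroring the example: one point per criterion.
RUBRIC = {"identifies_risk": 1, "names_mitigation": 1, "explains_why": 1}


def rubric_score(criteria_met: Iterable[str]) -> int:
    """Sum points for the rubric criteria a grader marked as met."""
    met = set(criteria_met)
    unknown = met - set(RUBRIC)
    if unknown:
        raise ValueError(f"not in rubric: {sorted(unknown)}")
    return sum(RUBRIC[c] for c in met)


def cohens_kappa(grader_a: Sequence[int], grader_b: Sequence[int]) -> float:
    """Chance-corrected agreement between two graders' scores."""
    n = len(grader_a)
    if n == 0 or n != len(grader_b):
        raise ValueError("need two equal-length, non-empty score lists")
    observed = sum(a == b for a, b in zip(grader_a, grader_b)) / n
    counts_a, counts_b = Counter(grader_a), Counter(grader_b)
    # Agreement expected if each grader assigned scores at random with their own frequencies.
    expected = sum(counts_a[s] * counts_b[s] for s in set(counts_a) | set(counts_b)) / (n * n)
    if expected == 1:
        raise ValueError("kappa undefined when both graders use a single score")
    return (observed - expected) / (1 - expected)


print(rubric_score(["identifies_risk", "explains_why"]))  # 2

# Hypothetical rubric totals (0-3) two graders gave the same eight answers.
grader_1 = [3, 2, 3, 1, 0, 2, 3, 1]
grader_2 = [3, 2, 2, 1, 0, 2, 3, 0]
print(round(cohens_kappa(grader_1, grader_2), 2))  # 0.67
```

Raw agreement here is 75%, but kappa drops to about 0.67 once chance agreement is removed. A low kappa is a signal to tighten the rubric wording or retrain graders.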

How often should a certification exam be updated?

At least every 18 to 24 months. Technology, regulations, and job roles change. If your exam hasn’t been reviewed in three years, it’s likely out of date. Look at pass rates, feedback from employers, and changes in industry standards. If new tools or practices have become standard, your exam must reflect them. Waiting too long erodes trust in the credential.

What’s the biggest mistake in designing professional certifications?

Designing the exam based on what’s easy to teach - not what’s essential to do. Too many programs build exams around training materials, textbooks, or instructor preferences. That creates a gap between the credential and real-world performance. The best certifications start with the job - not the classroom.

Next Steps for Certification Designers

If you’re responsible for a certification program, don’t wait for complaints to come in. Start small. Pick one exam and run a job task analysis. Talk to five people who’ve passed it and five who failed. Ask them what they felt was missing. Then rebuild one section of the exam around real tasks. Pilot it. Measure the results. Repeat.

Professional certifications are powerful tools. But they only work when people believe in them. And they only earn that belief when they’re fair, accurate, and meaningful. That’s not magic. It’s design.

Comments (16)

Tasha Hernandez November 26 2025

Oh wow. Another person who thinks certifications are sacred texts. Let me guess - you also believe that if you memorize the NIST handbook, you can fix a live server outage at 3 AM? I’ve seen people pass certs with flying colors and then panic when the coffee machine breaks. Validity? More like validity theater. You’re not measuring competence. You’re measuring who stayed awake during the webinar.

And don’t get me started on Cronbach’s alpha. That’s not a magic number - it’s a placebo for people who don’t want to talk to actual workers. Real competence doesn’t show up in multiple-choice bubbles. It shows up when someone’s got grease on their hands and a deadline breathing down their neck.

Also, ‘scenario-based questions’? Cute. Until you realize the scenario is about managing a project in a fictional company with a CEO named ‘Bob’ who somehow knows quantum physics. Real jobs don’t come with tidy case studies. They come with screaming clients, half-baked tools, and managers who think ‘agile’ means ‘do more with less.’

Anuj Kumar November 26 2025

This is all a scam. Certifications are just corporate control tools. Big companies don’t care if you can do the job. They care if you paid for the badge. The whole system is rigged. They make the exam impossible unless you buy their $2000 course. Then they say ‘look how selective we are.’

Meanwhile, the guy who fixed the entire network last year without a cert? Fired because he didn’t have the right box checked. This isn’t about quality. It’s about profit. And you’re just helping them sell more books.

They don’t want skilled people. They want obedient ones. That’s why they use multiple choice - so you can’t think for yourself.

Mbuyiselwa Cindi November 27 2025

I love this breakdown. As someone who’s been on both sides - certified and hiring - I can say this: the best certs are the ones that feel like a real conversation with the job. Not a quiz.

At my last job, we stopped requiring the old PMP-style exam and switched to a live simulation: here’s your team, here’s the budget, here’s the client who’s losing it. Pass it? You’re hired. Fail? We give you feedback and a chance to retry. No multiple choice. Just real decisions.

And honestly? The pass rate dropped from 85% to 58%. But the quality of hires went through the roof. Employers started asking for our candidates first. That’s the goal - not to gatekeep, but to raise the bar in a way that actually means something.

Also, pilot testing? Do it. We had one question about ‘stakeholder alignment’ that 70% of candidates got wrong. Turned out - no one in our industry actually says that phrase. We changed it to ‘who’s yelling at you the loudest?’ and suddenly everyone got it. Real language > textbook language.

Veera Mavalwala November 27 2025

Oh my god, this is the most painfully accurate thing I’ve read in years - and I’ve read a lot of corporate fluff written by people who’ve never held a real job. The whole certification industrial complex is a performance art piece where the audience is terrified of hiring someone who didn’t pay $1,200 to a vendor who owns the copyright to the word ‘synergy.’

Let’s be real - most of these exams are designed by people who’ve never stepped into a war room at 2 AM during a system meltdown. They sit in air-conditioned offices, sipping oat milk lattes, writing questions like ‘Which of the following is NOT a component of the Agile Manifesto?’ - as if anyone in the field actually memorizes that. We don’t care about the manifesto. We care about whether you can calm down a panicked client while simultaneously fixing a database that’s about to implode.

And don’t even get me started on ‘Cronbach’s alpha.’ It’s like measuring the quality of a surgeon by how many times they’ve shaken hands with a textbook. I’ve seen people with 0.92 alpha scores freeze when a server went down. I’ve seen people with 0.68 scores fix it in 12 minutes while humming a Beatles song.

The entire system is built on the delusion that competence can be measured in bubbles. It can’t. It’s measured in sweat, in late nights, in the quiet confidence of someone who’s seen the thing break before - and knows how to fix it without a manual.

And yet, we still pretend. We still make people pay. We still make them feel like failures because they didn’t memorize the difference between ‘risk appetite’ and ‘risk tolerance’ - terms no one in the real world uses outside of PowerPoint decks.

It’s not just broken. It’s a joke. And the worst part? We’re all laughing… while we’re paying for the ticket.

Ray Htoo November 28 2025

This is actually one of the clearest explanations I’ve seen on this topic. I work in healthcare IT, and I’ve seen too many people with certs who couldn’t troubleshoot a printer. Meanwhile, the tech who fixed the entire EHR system last year? Never got certified. Just good at his job.

I love the point about scenario-based questions. We did a pilot with a few real-life incident simulations - like ‘Your system just went down during surgery. What do you do?’ - and the difference was night and day. People who passed the old exam failed the simulation. People who failed the old exam aced it.

Also, the 18-month review cycle? YES. We updated our exam last year after a new HIPAA clause came out. We added two questions. One person complained they ‘weren’t in the study guide.’ I told them the study guide isn’t the law. The law is. And now they get it.

Thanks for writing this. It’s not just useful - it’s necessary.

Natasha Madison November 28 2025

Why are we letting foreign countries dictate our certification standards? I saw a question in one of these exams that used a British spelling of ‘realize’ - and then asked about ‘the UK’s NHS system’ as if every American engineer works in the NHS. This isn’t validity. This is cultural imperialism disguised as professionalism.

And who wrote this? Someone from Silicon Valley? They think ‘job task analysis’ means interviewing five people on LinkedIn. Real professionals don’t live in tech bubbles. We’re in factories, hospitals, power plants - places where ‘scenario-based questions’ mean ‘you have 30 seconds to stop a meltdown.’

This whole system is designed to exclude people who don’t look like them. That’s why they love multiple choice - it’s easier to filter out the ‘unqualified’ - which, in their minds, means anyone without a college degree and a Starbucks habit.

Sheila Alston November 30 2025

People who design these exams are just lazy. They don’t want to think. They don’t want to talk to real people. They just want to check a box. And then they wonder why no one trusts certifications anymore.

It’s not the candidates’ fault. It’s the system’s. And it’s morally wrong to make people pay hundreds - sometimes thousands - of dollars to prove they’re competent, when the test doesn’t even reflect reality.

I’ve seen people cry after failing because they studied for six months. They didn’t fail because they weren’t good. They failed because the test was designed by someone who thinks ‘risk management’ means memorizing a flowchart.

It’s not just unfair. It’s cruel. And we’re all complicit.

sampa Karjee December 1 2025

Let me be blunt: this entire framework is a bourgeois fantasy. You speak of ‘job task analysis’ as if it’s some sacred ritual. But who gets to define the tasks? The managers. The consultants. The people who’ve never held a wrench or typed a line of production code.

And Cronbach’s alpha? A mathematical illusion. It measures consistency, not competence. You can have a perfectly reliable test that measures nothing. Like measuring height to determine intelligence.

Real competence is messy. It’s intuition. It’s experience. It’s knowing when to ignore the manual. You can’t quantify that with a 100-item multiple choice exam designed by someone who got their PhD in ‘assessment theory’ and has never worked a day in the field.

And yet - we still bow. We still pay. We still pretend. Because the system rewards obedience, not skill.

Patrick Sieber December 3 2025

Well said. I’ve been designing exams for engineers in Ireland for over a decade. The moment we switched from textbook recall to real-world simulations - like ‘here’s your SCADA system, here’s the alarm log, fix it’ - our pass rate dropped from 80% to 52%.

But employer satisfaction? Jumped from 60% to 94%.

People said we were being too hard. I said: ‘No. We were being too easy.’

And yes, we pilot every question. We’ve had candidates tell us a question was culturally biased because it referenced ‘the Irish weather’ - which is just rain. But we kept it. Because in Ireland, it’s real. And if you can’t handle that, maybe you’re not ready for the job here.

Discipline > convenience. Always.

Kieran Danagher December 4 2025

They say ‘validity and reliability’ like it’s some holy grail. But the truth? Most certification bodies don’t even know what those words mean. They just copy-paste from some template written in 2007.

I once reviewed an IT cert that had a question about ‘Windows XP patch management.’ In 2023. The exam was ‘updated’ last year. The update? Changed the font.

Reliability? If you take it in the morning, you pass. Take it at night, you fail - because the server glitches and randomizes the questions wrong.

Validity? The exam tests 12 different protocols. Five of them haven’t been used since 2010. The one thing that actually matters - how to respond to ransomware - is buried under three questions about router configurations no one uses anymore.

It’s not broken. It’s dead. And no one’s willing to bury it.

OONAGH Ffrench December 5 2025

Validity is alignment

Reliability is consistency

The rest is noise

Most people confuse rigor with complexity

True rigor is simplicity that works

Job tasks not textbooks

Real outcomes not memorized phrases

Update or die

That’s it

poonam upadhyay December 6 2025

Ugh. I’m so tired of this. I’ve seen this exact same article posted 3 times in the last 6 months. Everyone’s obsessed with ‘validity’ and ‘reliability’ like it’s some kind of holy scripture. But nobody’s talking about the fact that 90% of these certifications are owned by private companies who make millions off them. They don’t care if you can do the job - they care if you buy their $1,800 bootcamp. And then they sell your data to recruiters. And then they charge employers $500 to verify your ‘badge.’

And don’t even get me started on the ‘scenario-based questions’ - they’re all written by consultants who’ve never seen a real server room. One question asked about ‘a client in rural Kenya’ needing a cloud migration - but the scenario assumed they had stable internet and a budget of $200k. In Kenya? That’s a fantasy. And yet, it’s on the exam.

It’s not about competence. It’s about capitalism dressed up as professionalism. And we’re all just playing along because we’re scared we won’t get hired otherwise.

Wake up. This isn’t a system. It’s a pyramid scheme with a textbook.

Shivam Mogha December 7 2025

Just do the job task analysis. That’s it.

mani kandan December 8 2025

I’ve been in this field for 18 years - as a practitioner, a trainer, and now a certification reviewer. What this post nails is the gap between theory and practice. I’ve seen brilliant engineers fail exams because they didn’t memorize the ‘correct’ answer to a question that no one in the real world would ask.

But here’s the twist: I’ve also seen people with low scores become incredible leaders because they learned by doing - not by studying.

So yes, validity and reliability matter. But so does humility. The best certifiers aren’t the ones who know the most. They’re the ones who know the most about how little they know.

And that’s something no multiple-choice question can measure.

Rahul Borole December 10 2025

As a certified assessment designer with over two decades of experience in global certification programs across 14 countries, I can unequivocally affirm that the principles outlined herein represent the gold standard in high-stakes credentialing.

It is imperative that certification bodies adhere strictly to the NIST-aligned job task analysis methodology, implement item response theory with a minimum Cronbach’s alpha threshold of 0.85 (not 0.8), and conduct biannual review cycles with stakeholder validation panels comprising industry subject matter experts, psychometricians, and regulatory compliance officers.

Furthermore, scenario-based items must be validated through cognitive interviews with at least 20 domain experts per item, and distractors must be constructed using the most common misconceptions identified in prior pilot data.

Any deviation from this protocol constitutes a breach of professional integrity and risks undermining public trust in the entire certification ecosystem.

Let us not forget: certification is not merely a credential - it is a social contract with society to ensure safety, competence, and excellence.

Proceed with diligence. Proceed with rigor. Proceed with purpose.

Tasha Hernandez December 11 2025

Wow. So now we’re supposed to believe that the guy who wrote the 18-page formal memo is the real expert? Meanwhile, the person who actually fixed the system last Tuesday is still stuck in a cubicle because he didn’t pay for the ‘gold standard’ course.

Here’s a radical idea: maybe the ‘gold standard’ is broken because it’s written by people who’ve never been on the front lines.

Let’s stop worshiping the memo. Start listening to the people who show up every day.