Most companies spend thousands on employee training every year. But how many can say their training actually changed behavior or improved results? If you can’t answer that, you’re not alone. The problem isn’t a lack of training. It’s a lack of measurement. The Kirkpatrick Model is one of the oldest and most widely used frameworks for figuring out whether training works. And it’s not just theory: Fortune 500 companies, government agencies, and small businesses alike use it to demonstrate real impact.

What the Kirkpatrick Model Actually Is

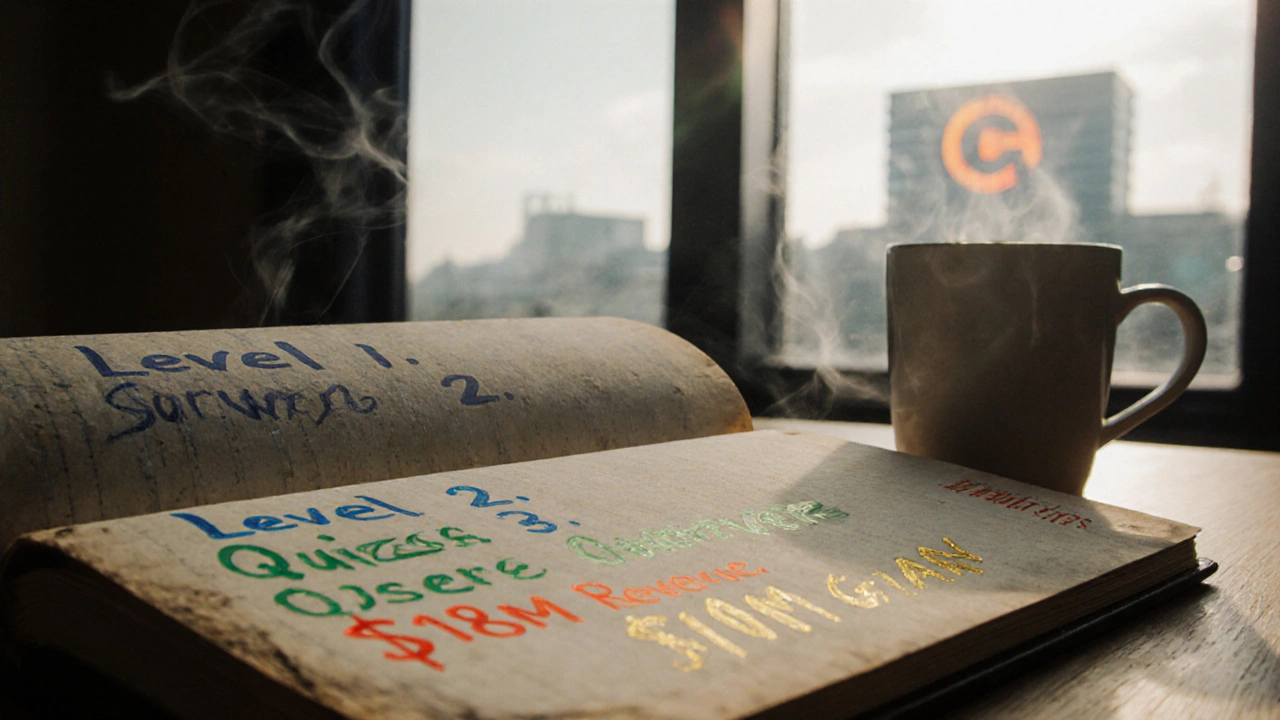

The Kirkpatrick Model breaks training effectiveness into four clear levels. It was created by Donald Kirkpatrick in the 1950s and has been updated over time, but the core stays the same. Unlike simple feedback surveys that ask, “Did you like the course?”, this model digs into what actually changed.

Each level builds on the one before it:

- Level 1: Reaction - Did participants like it?

- Level 2: Learning - Did they learn anything?

- Level 3: Behavior - Are they using it on the job?

- Level 4: Results - Did it move the business needle?

Here’s the catch: most companies stop at Level 1. They send out smile sheets (post-course satisfaction surveys) and call it a day. But if you only measure reaction, you’re guessing. The real value lies in Levels 3 and 4. That’s where you prove training isn’t just a cost, but an investment.

Level 1: Measuring Reaction - The Easy Start

This is the most common level, and the least useful on its own. But it’s still important. You need to know if people were engaged. A bored audience won’t learn. A frustrated one won’t apply.

How to measure it:

- Post-training surveys with 5-7 simple questions

- Use a 1-5 scale: “How relevant was this training to your role?”

- Ask open-ended questions: “What was the most valuable takeaway?”

Don’t overcomplicate it. A 3-minute survey sent via email right after the session works fine. Look for patterns. If 80% say the content was “not useful,” you’ve got a problem. But if everyone says it was great, don’t celebrate yet. Level 1 tells you whether the training was well received, not whether it worked.
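Looking for patterns in a 1-5 survey can be as simple as an average plus the share of low scores. The sketch below is one way to do that, not a prescribed method: the function name `summarize_ratings`, the cutoff of 2 for a "low" score, and the sample answers are all assumptions made for illustration.

```python
from collections import Counter
from statistics import mean


def summarize_ratings(ratings, low_threshold=2):
    """Summarize 1-5 ratings for a single survey question.

    low_threshold is an assumed cutoff: scores at or below it count as "low".
    """
    if not ratings:
        raise ValueError("no ratings to summarize")
    counts = Counter(ratings)
    low = sum(n for score, n in counts.items() if score <= low_threshold)
    return {
        "responses": len(ratings),
        "average": round(mean(ratings), 2),
        "share_low": round(low / len(ratings), 2),
    }


# Hypothetical answers to "How relevant was this training to your role?"
relevance = [5, 4, 4, 2, 1, 3, 5, 4, 2, 4]
print(summarize_ratings(relevance))
# {'responses': 10, 'average': 3.4, 'share_low': 0.3}
```

A high share of low scores on a single question is usually more actionable than the overall average, because it points at the specific part of the session that missed.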

Level 2: Measuring Learning - Did They Actually Learn?

This is where training evaluation starts to get serious. You’re no longer asking for opinions. You’re testing knowledge.

Examples:

- Pre- and post-training quizzes with the same questions

- Skills demonstrations: “Show me how you’d handle this customer complaint”

- Case study analyses: “What would you do differently after this training?”

Let’s say you trained sales reps on objection handling. Before the session, only 32% could correctly identify the top three objections. After? 78%. That’s a clear learning gain. But here’s the trap: people can pass a test and still never use the skill. That’s why Level 2 alone doesn’t prove impact.
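The pre/post comparison above reduces to a short calculation. This sketch reports the raw percentage-point gain and, as an addition that is not part of the article, a normalized gain (the share of the available headroom that was closed). The function name `learning_gain` is hypothetical.

```python
def learning_gain(pre_pct, post_pct):
    """Return (percentage-point gain, normalized gain) for 0-100 pre/post figures.

    Normalized gain = gain / (100 - pre). It is an optional extra measure,
    useful when groups start from different baselines.
    """
    if not (0 <= pre_pct <= 100 and 0 <= post_pct <= 100):
        raise ValueError("percentages must be between 0 and 100")
    point_gain = post_pct - pre_pct
    headroom = 100 - pre_pct
    normalized = point_gain / headroom if headroom > 0 else 0.0
    return point_gain, round(normalized, 2)


# Figures from the sales example: 32% correct before, 78% after.
points, normalized = learning_gain(32, 78)
print(points, normalized)  # 46 0.68
```

Using the same questions before and after, as the list above suggests, is what makes the two numbers comparable.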

Level 3: Measuring Behavior - Are They Doing It?

This is where most training programs fail. People learn. But do they change? That’s the real question.

Measuring behavior means watching what people do on the job, weeks or months after training. This is harder, but it’s where ROI starts to show.

How to do it:

- Manager observations: “Have you seen your team use the new conflict resolution technique?”

- Peer feedback: Anonymous 360-degree check-ins

- Work product reviews: Are reports now following the new format?

- Tracking systems: Are employees using the new CRM fields you trained them on?

One manufacturing company trained supervisors on safety protocols. Three months later, they checked incident reports. Accidents dropped by 41%. That wasn’t luck. It was behavior change. They didn’t just learn the steps; they started following them.

Don’t wait too long. Measure behavior 30-90 days after training. Too soon? People haven’t had time to adjust. Too late? You lose context.
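One low-tech way to stay inside the 30-90 day window is to compute the check-in dates when the training is scheduled and put them on a calendar. A minimal sketch, assuming check-ins at 30, 60, and 90 days; the function name `behavior_checkins` and the training date are made up for illustration.

```python
from datetime import date, timedelta


def behavior_checkins(training_date, offsets_days=(30, 60, 90)):
    """Return the dates on which to review on-the-job behavior."""
    return [training_date + timedelta(days=d) for d in offsets_days]


# Hypothetical training date.
for checkin in behavior_checkins(date(2025, 1, 15)):
    print(checkin.isoformat())
# 2025-02-14
# 2025-03-16
# 2025-04-15
```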

Level 4: Measuring Results - What Did It Do for the Business?

This is the gold standard. Did training improve sales? Reduce errors? Cut turnover? Increase customer satisfaction?

To measure this, you need to connect training to hard metrics. This is where many people give up. But you don’t need a PhD in statistics. Just pick one or two key business outcomes.

Examples:

- Customer service training → reduced call handling time by 18%

- Leadership program → 25% drop in voluntary turnover among managers

- Software training → 30% faster onboarding for new hires

- Compliance training → zero fines in the next audit

Here’s how to link training to results:

- Identify the business problem the training was meant to fix

- Collect baseline data before training (e.g., average error rate = 12%)

- Measure the same metric 6-12 months after training

- Rule out other factors: Was there a new system? A policy change? A market shift?

One retail chain trained cashiers on upselling. Before: average basket size was $42. After 6 months: $51. That’s $9 more per transaction. With 2 million transactions a year, that’s $18 million in added revenue, provided the other factors listed above have been ruled out. That’s not a cost. That’s a return.
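The basket-size arithmetic, plus one simple way to rule out other factors, can be sketched as follows. The comparison-group adjustment is an assumption added here, not something the retail example reports: it subtracts whatever change showed up in a group that was not trained. The function name and the comparison figures ($42 to $44) are hypothetical.

```python
def attributable_uplift(baseline, after, control_baseline=None, control_after=None):
    """Per-transaction uplift, optionally net of the change in an untrained group."""
    raw = after - baseline
    if control_baseline is None or control_after is None:
        return raw
    return raw - (control_after - control_baseline)


ANNUAL_TRANSACTIONS = 2_000_000  # from the retail example

# Raw before/after comparison from the example: $42 -> $51.
raw = attributable_uplift(42, 51)
print(raw, raw * ANNUAL_TRANSACTIONS)  # 9 18000000

# Hypothetical: untrained stores also rose from $42 to $44 over the same period.
net = attributable_uplift(42, 51, control_baseline=42, control_after=44)
print(net, net * ANNUAL_TRANSACTIONS)  # 7 14000000
```

If no untrained group exists, the raw number is still worth reporting, as long as the list of other changes in the same period is reported alongside it.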

How to Use the Model in Real Life - A Practical Plan

You don’t need to measure all four levels every time. But you should plan for them.

Here’s a simple 4-step plan:

- Start with the goal - What business outcome do you want? (e.g., reduce customer complaints)

- Choose the right level - If you’re testing a new software tool, Level 2 (learning) and Level 3 (behavior) matter most. If you’re rolling out a company-wide policy, go straight to Level 4 (results).

- Collect data before and after - Always have a baseline. Without it, you’re just guessing.

- Share the results - Show managers the numbers. If training reduced errors by 30%, they’ll fund the next one.

Pro tip: Use a simple dashboard. One page. Before/after metrics. Who was trained. What changed. Make it easy to understand. If your leadership team can’t get it in 30 seconds, you’re not communicating well.
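A one-page dashboard does not need a BI tool. The sketch below renders a plain-text before/after table that could be pasted into an email. The function name `render_dashboard`, the program name, the headcount, and the "after" value are illustrative assumptions; only the 12% baseline and the 30% reduction echo figures used earlier in the article. It assumes every "before" value is non-zero.

```python
def render_dashboard(program, trained, metrics):
    """Build a plain-text summary: who was trained and what changed.

    metrics is a list of (name, before, after) tuples with non-zero 'before'.
    """
    lines = [f"Training: {program}", f"People trained: {trained}", ""]
    lines.append(f"{'Metric':<22}{'Before':>10}{'After':>10}{'Change':>10}")
    for name, before, after in metrics:
        change = (after - before) / before * 100  # relative change in percent
        lines.append(f"{name:<22}{before:>10.1f}{after:>10.1f}{change:>+9.1f}%")
    return "\n".join(lines)


# Hypothetical program; error rate falls from 12% to 8.4%, a 30% reduction.
print(render_dashboard("Order entry refresher", 40, [("Error rate (%)", 12.0, 8.4)]))
```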

Common Mistakes (And How to Avoid Them)

Even smart people mess this up. Here are the top three mistakes:

- Mistake 1: Measuring only reaction. Solution: Add a Level 2 quiz. Even a 5-question test makes a difference.

- Mistake 2: Waiting too long to measure behavior. Solution: Schedule manager check-ins at 30 and 60 days. Don’t assume people will report it.

- Mistake 3: Trying to measure everything. Solution: Pick one business metric. Nail that. Then add another next time.

Also, don’t blame the trainer if results don’t improve. Training is a tool. The real work happens when managers reinforce it. If you train people to use a new system but don’t change the performance review to include it? You’re wasting time.

When the Kirkpatrick Model Isn’t Enough

It’s not perfect. For complex, long-term skills like leadership or innovation, results take years. The model doesn’t capture soft outcomes like morale or culture change.

That’s why some companies combine it with other tools:

- Phillips ROI Methodology - Adds a Level 5: financial return on investment (cost vs. benefit)

- Brinkerhoff’s Success Case Method - Finds the best and worst cases to understand why training worked or didn’t

But for 90% of training programs? Kirkpatrick is enough. Start there. Master it. Then expand.
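For teams that do add the Phillips Level 5 mentioned above, the calculation itself is short: ROI (%) is net program benefits divided by program costs, times 100, and the benefit-cost ratio is benefits divided by costs. The hard part is agreeing on the monetary benefit, which this sketch does not address. The dollar figures are hypothetical.

```python
def roi_percent(benefits, costs):
    """ROI (%) = (benefits - costs) / costs * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


def benefit_cost_ratio(benefits, costs):
    """BCR = benefits / costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return benefits / costs


# Hypothetical program: $150,000 in monetary benefits, $50,000 in total costs.
print(roi_percent(150_000, 50_000))         # 200.0
print(benefit_cost_ratio(150_000, 50_000))  # 3.0
```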

Final Thought: Training Isn’t an Expense. It’s a Lever.

If you can’t measure it, you can’t manage it. And if you can’t manage it, you’re just spending money hoping for the best.

The Kirkpatrick Model gives you a clear path from “we did a training” to “this training made us more profitable.” It’s not flashy. It’s not trendy. But it works. And in a world full of buzzwords, that’s rare.

Start with Level 1. Build to Level 4. Track one metric. Prove one result. Then do it again. That’s how you turn training from a cost center into a competitive advantage.

Is the Kirkpatrick Model still relevant today?

Yes. Even with AI-driven learning platforms and real-time analytics, the Kirkpatrick Model remains the foundation for training evaluation. It’s not about the tools: you still need to know if people learned, changed behavior, and improved results. Modern tech just makes measuring those levels faster and more accurate.

How long does it take to see results from Kirkpatrick Level 4?

It depends on the skill. For technical training like software use, you might see results in 3-6 months. For leadership or cultural change, it can take 12-18 months. Always set expectations upfront. Don’t expect turnover to drop after 30 days if you trained managers on coaching.

Can small businesses use the Kirkpatrick Model?

Absolutely. You don’t need a big budget. A small business can start with Level 1 and Level 3. Ask employees: “Have you used the new process?” Check sales numbers or error rates before and after. Even one clear metric proves value. Many small businesses skip evaluation and lose funding for future training because they can’t show impact.

What’s the difference between Level 3 and Level 4?

Level 3 is about individual behavior: “Is the employee using the new technique?” Level 4 is about organizational results: “Did that behavior improve sales, reduce costs, or increase quality?” One is about the person. The other is about the business outcome.

Do I need software to use the Kirkpatrick Model?

No. Many companies use spreadsheets, email surveys, and manager notes. Software helps automate data collection and reporting, but the model itself is simple. You can start with paper forms and a calendar reminder to check in with team leads. The key is consistency, not technology.

Comments (16)

Scott Perlman November 12 2025

This model is so simple it’s almost embarrassing how often companies ignore it. Start with a survey, then check if people actually use what they learned. That’s it. No fancy software needed. Just pay attention.

Sandi Johnson November 13 2025

Oh wow, another article telling us to measure training. Shocking. I bet the author also thinks we should brush our teeth and pay taxes. Meanwhile, my company’s LMS still sends out ‘Rate this course!’ emails and calls it ‘evaluation.’

Eva Monhaut November 14 2025

I’ve seen this work in small teams where managers actually care. One team started tracking how often people used the new CRM fields after training. Three months later, data entry errors dropped by half. Not because of the training itself, but because the manager followed up. That’s the secret sauce. Training is just the spark. Leadership keeps it burning.

mark nine November 14 2025

Level 1 is useless unless you’re trying to make people feel good. Level 3 is where the magic happens. I once worked with a team that trained on safety procedures. No quizzes, no surveys. Just weekly check-ins with supervisors asking, ‘Did you use the new lockout tag?’ After 60 days, incidents dropped 50%. That’s real impact. No PowerPoint needed.

Tony Smith November 16 2025

It is imperative to recognize that the Kirkpatrick Model, while venerable and empirically robust, is frequently misapplied by organizations that mistake superficial engagement for substantive transformation. The conflation of affective response with behavioral change constitutes a systemic epistemological failure in corporate learning architecture.

Rakesh Kumar November 17 2025

In India, we do this all the time without knowing the name. We train staff, then after a month we ask: ‘Are you using it?’ If yes, we check if sales went up or complaints went down. No fancy charts. Just talking to people. Why make it complicated? The model just gave a name to what we’ve been doing for years.

Bill Castanier November 17 2025

Level 4 is the only metric that matters. If training doesn’t move a business number, it’s entertainment. Not investment. Companies that treat training like a gift instead of a lever are wasting money. One number. One goal. Prove it. Then move on.

Ronnie Kaye November 17 2025

Wait, so you’re telling me we should actually measure if people do the thing they were trained to do? Like, as in, not just smile and nod? That’s revolutionary. Next you’ll tell us to wear seatbelts and drink water. I’m so inspired I might actually implement this… maybe after lunch.

Priyank Panchal November 18 2025

You think this is hard? Try training people in a country where 70% of employees don’t read emails. You send a survey? They ignore it. You ask managers to observe? They don’t care. You want Level 4? Good luck. This model only works if your company gives a damn. Most don’t. Stop pretending.

Ian Maggs November 19 2025

Is measurement, then, not merely an act of control? The Kirkpatrick Model, in its fourfold structure, imposes a Cartesian hierarchy upon human development, reducing the ineffable, evolving nature of learning to quantifiable, linear stages. But what of the unmeasurable? The quiet transformation? The spark that never appears in a spreadsheet? Are we not, in our zeal for metrics, silencing the very essence of growth?

Michael Gradwell November 20 2025

Of course you’re gonna say this works. You probably work at a company that still uses PowerPoint and thinks ‘engagement’ means free donuts. If your training didn’t fix your broken culture, your whole system is broken. Stop blaming the model. Blame the idiots who run it.

Flannery Smail November 21 2025

Actually, I’ve seen companies measure Level 4 and still have terrible results. The metric was wrong. The baseline was fake. The ‘improvement’ was just noise. So yeah, this model sounds great. But in practice? It’s just another way to lie to yourself with charts.

Emmanuel Sadi November 22 2025

Everyone says this works. But where are the real case studies? Not the PR fluff from big consultancies. I want raw data from a company that didn’t cherry-pick results. Show me the 50% of programs that failed. Show me the managers who ignored the training. Show me the numbers that didn’t move. Until then, this is just corporate gospel.

Nicholas Carpenter November 22 2025

I’ve used this with remote teams across time zones. We started with Level 3: we asked managers to note one behavior change per week. Didn’t need fancy tools. Just a shared doc. After six months, we had clear patterns. People were using the new process. Sales went up. We didn’t need Level 4 to know it worked. Level 3 was enough to justify the next round.

Chuck Doland November 23 2025

The Kirkpatrick Model, when implemented with fidelity and rigor, constitutes a paradigm of empirical evaluation in organizational learning. Its hierarchical structure ensures that causal attribution remains defensible, and its temporal sequencing permits longitudinal analysis. However, its efficacy is contingent upon institutional commitment to data integrity, managerial accountability, and the elimination of cognitive biases in outcome interpretation. To apply it superficially is not merely ineffective; it is ethically indefensible.

Scott Perlman November 25 2025

Chuck just wrote a novel. I just said: check if people use it. Both are right. But only one gets read.