When you run a business course - whether it’s an online MBA, a weekend workshop, or a corporate training program - you’re not just teaching. You’re running a product. And like any product, it needs to be measured, tested, and improved. Too many course creators rely on gut feelings: "It went well," or "Students seemed engaged." But if you don’t track what’s actually happening, you’re flying blind. The difference between a course that fades away and one that grows? Data.

What KPIs Actually Matter for Business Courses

Not all numbers are created equal. You don’t need to track every click. You need the ones that tell you if your course is working. Here are the three KPIs that actually move the needle:

- Completion rate: What percentage of enrolled students finish the course? If it’s below 40%, something’s wrong. Maybe the content is too long, the pacing drags, or the first module doesn’t hook them.

- Engagement depth: How many videos do they watch? How many quizzes do they attempt? How often do they return? A student who watches 80% of videos and submits all assignments is far more likely to recommend your course than one who logs in once.

- Outcome satisfaction: Ask them. Not with a 5-star rating. Ask: "What’s one thing you’ve applied in your job since taking this course?" Real-world application is the gold standard. If students can’t name one useful takeaway, your course isn’t delivering value.

These aren’t vanity metrics. They’re survival metrics. A course with 70% completion and 90% outcome satisfaction will grow through word-of-mouth. One with 20% completion and 30% satisfaction? It’ll die quietly.
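To make the first two KPIs concrete, here is a minimal sketch of how they could be computed from an export of student records. The `StudentRecord` fields, the sample data, and the choice to average video and quiz coverage into one "engagement depth" number are all assumptions for illustration; your platform’s export will look different.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    # Hypothetical fields; adapt them to whatever your platform exports.
    student_id: str
    videos_watched: int
    videos_total: int
    quizzes_attempted: int
    quizzes_total: int
    finished_course: bool


def completion_rate(records):
    """Share of enrolled students who finished the course (0.0 to 1.0)."""
    if not records:
        return 0.0
    return sum(r.finished_course for r in records) / len(records)


def engagement_depth(record):
    """Average of video coverage and quiz coverage for one student (0.0 to 1.0)."""
    video_share = record.videos_watched / record.videos_total if record.videos_total else 0.0
    quiz_share = record.quizzes_attempted / record.quizzes_total if record.quizzes_total else 0.0
    return (video_share + quiz_share) / 2


# Made-up sample data: five enrolled students.
records = [
    StudentRecord("s1", 10, 10, 5, 5, True),
    StudentRecord("s2", 8, 10, 4, 5, True),
    StudentRecord("s3", 1, 10, 0, 5, False),
    StudentRecord("s4", 3, 10, 1, 5, False),
    StudentRecord("s5", 9, 10, 5, 5, True),
]

print(f"Completion rate: {completion_rate(records):.0%}")
for r in records:
    print(f"{r.student_id}: engagement depth {engagement_depth(r):.0%}")
```

Outcome satisfaction is left out on purpose: it comes from open-ended answers, which need to be read rather than averaged.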

Building Dashboards That Actually Get Used

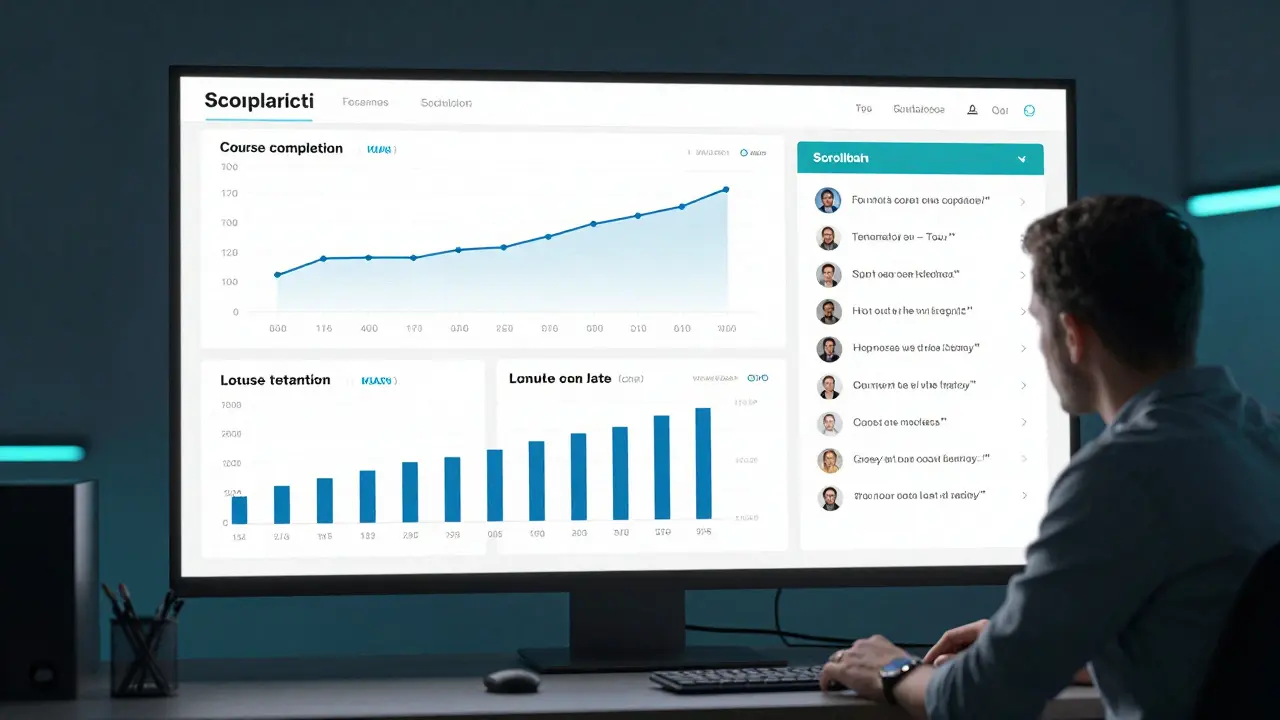

Dashboards aren’t for showing off. They’re for making decisions. A good course dashboard tells you three things in under 10 seconds:

- Where are students dropping off?

- Who’s excelling - and who’s falling behind?

- What’s the trend over time? Are results getting better or worse?

Most dashboards fail because they show too much. A wall of charts doesn’t help - it paralyzes. Here’s what a working dashboard looks like:

- A single line graph showing weekly completion rates over the last 12 months.

- A bar chart comparing quiz scores by module - highlighting the one with the lowest pass rate.

- A list of the top 5 student comments from feedback forms, updated weekly.

At a course I helped redesign last year, we cut the dashboard from 14 charts to 3. Within two months, instructors started checking it daily. Why? Because they could act on it. One module had a 65% failure rate. We rewrote it. Within a month, pass rates jumped to 89%.

Don’t build dashboards for your team. Build them for the person who needs to fix something.
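The "bar chart with the weakest module highlighted" idea boils down to one small calculation. The sketch below assumes quiz results arrive as simple `(module, passed)` pairs; that shape, the module names, and the numbers are invented for illustration.

```python
from collections import defaultdict

# Hypothetical quiz results: (module name, did the student pass?)
quiz_results = [
    ("Module 1", True), ("Module 1", True), ("Module 1", False),
    ("Module 2", True), ("Module 2", False), ("Module 2", False),
    ("Module 3", True), ("Module 3", True), ("Module 3", True),
]


def pass_rates_by_module(results):
    """Return {module: pass rate} for every module that has at least one attempt."""
    attempts = defaultdict(int)
    passes = defaultdict(int)
    for module, passed in results:
        attempts[module] += 1
        passes[module] += int(passed)
    return {module: passes[module] / attempts[module] for module in attempts}


def weakest_module(results):
    """Name of the module with the lowest pass rate."""
    rates = pass_rates_by_module(results)
    return min(rates, key=rates.get)


rates = pass_rates_by_module(quiz_results)
worst = weakest_module(quiz_results)
for module, rate in rates.items():
    flag = "  <- lowest pass rate" if module == worst else ""
    print(f"{module}: {rate:.0%}{flag}")
```

Whatever charting tool you use, feeding it this one highlighted number is what makes the chart something an instructor can act on.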

Running Experiments Like a Startup

Business courses don’t have to be static. You can test changes the same way a tech startup tests a new feature. Here’s how:

Let’s say you notice students aren’t finishing the leadership module. Instead of guessing why, run a small experiment:

- Version A: Original 45-minute video lecture.

- Version B: Three 10-minute videos with interactive reflection prompts after each.

Roll out Version B to 20% of new students and keep Version A for the rest. Track completion, quiz scores, and feedback. After two weeks, compare the two groups. If enough students have gone through each version, you’ll have evidence of which one works better instead of opinion and guesswork.
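One way to run this kind of split, sketched under a few assumptions: students are assigned by hashing their ID (so the same student always sees the same version), and completion is compared with a standard pooled two-proportion z-test. The function names, the `salt` label, and the enrollment numbers are hypothetical.

```python
import hashlib
import math


def assign_variant(student_id, share_b=0.20, salt="leadership-module-v1"):
    """Deterministically place a student in 'A' or 'B' based on a hash of their ID."""
    digest = hashlib.sha256(f"{salt}:{student_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "B" if bucket < share_b else "A"


def two_proportion_p_value(done_a, n_a, done_b, n_b):
    """Two-sided p-value for a difference in completion rates (pooled z-test)."""
    pooled = (done_a + done_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # everyone or no one completed; no detectable difference
    z = (done_b / n_b - done_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


# Made-up results after two weeks: 240 students saw A, 60 saw B.
done_a, n_a = 96, 240
done_b, n_b = 39, 60
p_value = two_proportion_p_value(done_a, n_a, done_b, n_b)
print(f"A: {done_a / n_a:.0%}  B: {done_b / n_b:.0%}  p-value: {p_value:.4f}")
```

A small p-value suggests the gap is unlikely to be noise. With only a handful of students per group, the test will rarely be conclusive, which is a signal to keep the experiment running longer.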

Another experiment: Change the timing of feedback. Some courses send automated feedback right after a quiz. Others wait 48 hours. We tested both. Waiting 48 hours increased revision rates by 37%. Why? Students had time to think. They didn’t just click "submit" and move on.

Experiments don’t require a big budget. They require curiosity. One course creator I know tested sending a short voice note to students who hadn’t logged in for 3 days. Response rate jumped from 8% to 41%. It cost her 20 minutes a week.
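Finding who to nudge is a short filter over last-login timestamps. This sketch assumes you can export a mapping of student ID to last login time; the IDs and dates are invented.

```python
from datetime import datetime, timedelta


def inactive_students(last_logins, now, days=3):
    """Return IDs of students whose last login is at least `days` days old."""
    cutoff = now - timedelta(days=days)
    return sorted(sid for sid, last in last_logins.items() if last <= cutoff)


# Hypothetical export: student ID -> last login time.
now = datetime(2024, 3, 10, 9, 0)
last_logins = {
    "s1": datetime(2024, 3, 9, 18, 30),
    "s2": datetime(2024, 3, 6, 8, 0),
    "s3": datetime(2024, 3, 7, 9, 0),
    "s4": datetime(2024, 3, 1, 12, 0),
}

to_nudge = inactive_students(last_logins, now)
print(to_nudge)
```

Passing `now` in explicitly, rather than calling `datetime.now()` inside the function, keeps the check easy to test and rerun for past dates.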

Connecting the Dots: KPIs, Dashboards, and Experiments Together

KPIs tell you what’s happening. Dashboards show you where to look. Experiments let you fix it.

Think of it like a car. KPIs are the readings - engine temperature, fuel level, tire pressure. The dashboard is the gauge panel that puts those readings in front of you. Experiments are the mechanic’s toolkit.

Here’s how they work together in practice:

- You notice completion rates are dropping. (KPI)

- You check your dashboard and see the drop happens right after Module 3. (Dashboard)

- You run an A/B test: swap Module 3’s content for a shorter, case-study version. (Experiment)

- Completion rates rise by 22%. You roll it out to everyone. (Action)

This loop - measure, observe, test, act - turns your course from a static product into a living system. It adapts. It improves. It grows.
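The "observe" step, spotting where the drop happens, can be sketched as a simple funnel calculation. It assumes you have an ordered count of how many students started each module; the counts below are made up.

```python
def biggest_drop_off(started_counts):
    """Find the module after which the largest share of students disappears.

    started_counts: ordered list of (module name, students who started it).
    Returns (module name, share lost before the next module), or None if
    there are fewer than two modules with data.
    """
    worst = None
    for (prev_mod, prev_n), (_, next_n) in zip(started_counts, started_counts[1:]):
        if prev_n == 0:
            continue  # nobody reached this module, nothing to lose
        loss = (prev_n - next_n) / prev_n
        if worst is None or loss > worst[1]:
            worst = (prev_mod, loss)
    return worst


# Hypothetical funnel: students who started each module, in course order.
funnel = [
    ("Module 1", 200),
    ("Module 2", 180),
    ("Module 3", 170),
    ("Module 4", 102),
    ("Module 5", 95),
]

module, loss = biggest_drop_off(funnel)
print(f"Largest drop: {loss:.0%} of students leave after {module}")
```

In this invented funnel the loss is concentrated after Module 3, which is the module you would pick for the A/B test.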

Common Mistakes to Avoid

Even with data, people mess up. Here are the three most common mistakes:

- Measuring activity instead of impact: Tracking how many students logged in is easy. Tracking how many changed their behavior is hard - but it’s the number that matters most.

- Ignoring qualitative data: Numbers tell you what happened. Open-ended feedback tells you why. One student saying, "I didn’t understand how to apply this to my team," is worth 1,000 quiz scores.

- Waiting too long to act: If you wait three months to fix a module because "we’ll review it next quarter," you’ve already lost hundreds of students. Fix it in two weeks. Test it. Move on.

There’s no magic tool. No secret software. Just discipline. Check the numbers. Ask why. Test one thing. Repeat.

Where to Start Today

You don’t need to overhaul everything. Start with one module. Pick one KPI. Set up a simple dashboard. Run one experiment.

Here’s your 7-day plan:

- Day 1: Identify the module with the lowest completion rate.

- Day 2: Pull the data - how many started it? How many finished? What was the average quiz score?

- Day 3: Add one chart to your dashboard showing just that module’s completion trend over time.

- Day 4: Send a short survey: "What was the hardest part of this module?" (One question, 30 seconds max.)

- Day 5: Pick one change based on the data - shorten it? Add an example? Change the order?

- Day 6: Roll out the change to 10% of new students.

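- Day 7: Start comparing results. Did it help? If yes, roll it out. If not, try something else. If only a handful of students have seen the change so far, keep collecting data before you decide.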
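When you compare results, it helps to be explicit about whether a change is measured in percentage points or as a relative lift, because "up 22%" can mean either. A minimal sketch, with hypothetical counts:

```python
def compare_completion(before_done, before_n, after_done, after_n):
    """Compare two completion rates in percentage points and as a relative change."""
    before = before_done / before_n
    after = after_done / after_n
    return {
        "before": before,
        "after": after,
        "point_change": (after - before) * 100,  # percentage points
        "relative_change": (after - before) / before if before else None,
    }


# Made-up numbers: 90 of 200 finished the old module, 11 of 20 finished the new one.
result = compare_completion(90, 200, 11, 20)
print(f"Before: {result['before']:.0%}, after: {result['after']:.0%}")
print(f"Change: {result['point_change']:.1f} points, "
      f"{result['relative_change']:.0%} relative lift")
```

With only 20 students in the new group, treat a gap like this as a hint rather than a verdict.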

You don’t need to be an analyst. You just need to care enough to look.